The AI Stack in 2025: What Enterprise Teams Are Actually Using

AI hype is finally translating into enterprise deployments, and real stakes bring real decisions. In 2025, enterprise leaders are no longer asking whether they should invest in AI. They’re asking which tools, which frameworks, and which architecture will scale.

This post delivers a ground-up overview of the AI stack enterprise teams are actually using today—based on field insights, vendor trends, and our experience advising teams across industries.

You'll learn:

- The core layers of a modern AI stack

- Key tools being adopted in each layer

- Trade-offs between open vs. proprietary components

- Deployment patterns and governance must-haves

- What separates hobby projects from production-grade systems

Whether you're evaluating your first internal agent or scaling to dozens of LLM-enabled applications, this is your 2025 field guide.

🏗 The 7 Layers of the Enterprise AI Stack

Here’s how modern enterprise AI systems are being built—layer by layer.

1. Foundation Model Layer

This is the reasoning engine. Teams choose based on trade-offs in cost, control, security, and performance.

| Model Provider | Key Characteristics |
|---|---|
| OpenAI (GPT-4o) | Strong reasoning, multimodal, hosted via API |
| Anthropic (Claude 3) | Long context window, safe defaults, growing enterprise features |
| Google (Gemini 1.5) | Native integration with Google Workspace, good for Workspace-paired use cases |
| Meta (Llama 3) | Open weights, popular for fine-tuning, privacy control |
| Mistral / Mixtral | Efficient, multilingual, well suited to edge and private deployments |

Many organizations take a hybrid approach: API-based models for external-facing use cases and open models for internal, data-sensitive workloads.
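As a minimal sketch of that hybrid split, the routing decision can be a few lines of code. The sensitivity labels and the two backend names below are hypothetical placeholders, not part of any vendor API.

```python
from dataclasses import dataclass
from enum import Enum


class Sensitivity(Enum):
    PUBLIC = "public"
    INTERNAL = "internal"
    RESTRICTED = "restricted"


@dataclass(frozen=True)
class Request:
    prompt: str
    sensitivity: Sensitivity


# Hypothetical backend labels; swap in your real client objects.
HOSTED_API = "hosted-api-model"
SELF_HOSTED = "self-hosted-open-model"


def choose_backend(request: Request) -> str:
    """Send anything that is not public to the self-hosted model."""
    if request.sensitivity is Sensitivity.PUBLIC:
        return HOSTED_API
    return SELF_HOSTED


print(choose_backend(Request("Summarize our press release", Sensitivity.PUBLIC)))
print(choose_backend(Request("Summarize this contract", Sensitivity.RESTRICTED)))
```

Defaulting to the self-hosted path for anything not explicitly public is a deliberate choice here: a mislabeled request fails toward the more private option.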

2. Retrieval + Knowledge Layer

LLMs without context can’t reason over your business. Enterprises are investing in retrieval-augmented generation (RAG) pipelines.

Popular tools:

- Weaviate, Pinecone, Qdrant — vector databases for embedding search

- Chroma — lightweight open-source option for internal teams

- LlamaIndex / LangChain Retrieval — orchestration and doc loading

- Elasticsearch + Hybrid Search — structured + unstructured blend

Key trends:

- Chunking & metadata tagging are evolving rapidly

- Streaming data and event-based ingestion are becoming common
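To make the RAG flow concrete, here is a rough, dependency-free sketch of the three steps: chunk with metadata, retrieve by similarity, and build a grounded prompt. The bag-of-words `embed` function is a toy stand-in for a real embedding model, the in-memory list stands in for a vector database, and every name in the block is illustrative.

```python
import math
import re
from collections import Counter
from dataclasses import dataclass, field


@dataclass
class Chunk:
    text: str
    metadata: dict = field(default_factory=dict)


def chunk_document(text: str, source: str, max_words: int = 40) -> list[Chunk]:
    """Split text into fixed-size word windows and tag each with metadata."""
    words = text.split()
    chunks = []
    for start in range(0, len(words), max_words):
        piece = " ".join(words[start:start + max_words])
        chunks.append(Chunk(piece, {"source": source, "offset": start}))
    return chunks


def embed(text: str) -> Counter:
    """Toy bag-of-words vector; a real pipeline would call an embedding model."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse term-count vectors."""
    dot = sum(a[t] * b[t] for t in a.keys() & b.keys())
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


def retrieve(query: str, chunks: list[Chunk], k: int = 2) -> list[Chunk]:
    """Return the k chunks most similar to the query."""
    q = embed(query)
    return sorted(chunks, key=lambda c: cosine(q, embed(c.text)), reverse=True)[:k]


def build_prompt(query: str, chunks: list[Chunk]) -> str:
    """Prefix the question with retrieved context, citing each chunk's source."""
    context = "\n".join(f"[{c.metadata['source']}] {c.text}" for c in chunks)
    return f"Answer using only this context:\n{context}\n\nQuestion: {query}"
```

Keeping `source` and `offset` on every chunk is what later makes answers traceable back to a document, which matters as much as retrieval quality in an enterprise setting.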

3. Orchestration Layer

This is where the logic lives—how models are used, what tools they can call, how memory and agents behave.

Current leaders:

- LangChain — most feature-rich for chains, agents, memory, RAG

- CrewAI — framework for multi-agent orchestration

- AutoGen by Microsoft — strong for collaborative agents in Python

- Dust — workflow-focused SaaS with developer-friendly agent logic

- Reka & Cognosys — fast-growing platforms with agent safety features

Many teams build internal wrappers around these frameworks to align with their own architecture and compliance protocols.
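One way such an internal wrapper might look is sketched below, assuming the framework call can be reduced to a function from task string to result string. `fake_framework_agent` is a stand-in rather than a real LangChain, CrewAI, or AutoGen API, and the email-masking hook is only an example of a compliance rule.

```python
import re
from typing import Callable, Protocol


class AgentRunner(Protocol):
    """The single interface the rest of the codebase depends on."""

    def run(self, task: str) -> str: ...


class CompliantRunner:
    """Wraps any framework-specific callable behind the internal interface."""

    EMAIL = re.compile(r"[\w.+-]+@[\w-]+\.[\w.-]+")

    def __init__(self, backend: Callable[[str], str], name: str):
        self._backend = backend
        self.name = name

    def run(self, task: str) -> str:
        # Example compliance hook: mask email addresses before the task
        # reaches the framework. Real policies would be richer than this.
        redacted = self.EMAIL.sub("[redacted-email]", task)
        return self._backend(redacted)


def fake_framework_agent(task: str) -> str:
    """Stand-in for a framework call; hypothetical, for illustration only."""
    return f"handled: {task}"


runner: AgentRunner = CompliantRunner(fake_framework_agent, name="demo")
print(runner.run("Send the invoice to bob@example.com"))
```

Because callers only see `AgentRunner`, swapping one orchestration framework for another means writing a new backend function, not touching application code.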

4. Tool + API Execution Layer

Enterprise agents don’t just generate text—they take action.

Common integrations:

- Internal REST APIs (CRM, ERP, ticketing, etc.)

- SaaS tools (Slack, Notion, Salesforce, Gmail)

- File systems, databases, cloud functions

Best practice: enforce role-based access control (RBAC) and the principle of least privilege for every tool an agent can call.
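A minimal sketch of that check, assuming a static mapping from roles to permitted tools. The role names, tool names, and return strings are all made up for illustration.

```python
from typing import Callable

# Hypothetical role-to-tool grants; each role gets only what it needs.
ROLE_PERMISSIONS: dict[str, set[str]] = {
    "support_agent": {"read_ticket"},
    "support_lead": {"read_ticket", "close_ticket"},
}

# Stand-ins for real integrations such as a ticketing REST API.
TOOLS: dict[str, Callable[[str], str]] = {
    "read_ticket": lambda ticket_id: f"ticket {ticket_id}: printer offline",
    "close_ticket": lambda ticket_id: f"ticket {ticket_id} closed",
}


def call_tool(role: str, tool: str, arg: str) -> str:
    """Run a tool only if the caller's role was explicitly granted it."""
    if tool not in ROLE_PERMISSIONS.get(role, set()):
        raise PermissionError(f"role {role!r} may not call {tool!r}")
    return TOOLS[tool](arg)
```

Unknown roles fall through to an empty permission set, so the default is deny rather than allow.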

5. Guardrails + Observability Layer

This is where AI systems become safe, auditable, and trustworthy—a non-negotiable for enterprise use.

Tools & Approaches:

- Guardrails AI — output validation & schema enforcement

- Rebuff, NeMo Guardrails — input/output filtering

- Human-in-the-loop (HITL) approval pipelines

- Logging & tracing — LangSmith, PromptLayer, Traceloop

- Prompt versioning and policy dashboards

Without this layer, even well-built agents become liabilities.
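As an illustration of schema enforcement combined with a human-in-the-loop fallback, the sketch below uses plain Python rather than the Guardrails AI API. The field names, allowed categories, and confidence threshold are assumptions chosen for the example.

```python
import json

# Hypothetical schema for a ticket-classification agent.
REQUIRED_FIELDS = {"category": str, "confidence": float}
ALLOWED_CATEGORIES = {"billing", "technical", "other"}


def validate_output(raw: str) -> dict:
    """Parse model output and enforce a small schema; raise ValueError on failure."""
    try:
        data = json.loads(raw)
    except json.JSONDecodeError as exc:
        raise ValueError(f"not valid JSON: {exc}") from exc
    if not isinstance(data, dict):
        raise ValueError("expected a JSON object")
    for name, expected in REQUIRED_FIELDS.items():
        if not isinstance(data.get(name), expected):
            raise ValueError(f"field {name!r} missing or not {expected.__name__}")
    if data["category"] not in ALLOWED_CATEGORIES:
        raise ValueError(f"unknown category {data['category']!r}")
    return data


def triage(raw: str, min_confidence: float = 0.8) -> str:
    """Auto-approve valid, confident outputs; send everything else to a human."""
    try:
        data = validate_output(raw)
    except ValueError:
        return "human_review"
    return "auto_approve" if data["confidence"] >= min_confidence else "human_review"
```

Anything that fails to parse or validate is routed to a person instead of being retried silently, which keeps a visible record of how often the model misses the schema.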

6. Deployment + Hosting Layer

Your stack must fit your cloud, cost model, and compliance needs.

| Option | Use Case |
|---|---|
| API-based (OpenAI, Anthropic) | Fastest to prototype and iterate |
| Private LLMs via Azure/GCP | For regulated industries |
| Self-hosted (Llama, Mistral) | Best for privacy, edge, or cost control |
| Hybrid | Route to different models per task context |

Pro tip: at scale, latency budgets, caching, and retry logic are essential, so design for them from the start.
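A minimal sketch of caching and retry as wrappers around any model call, assuming the call is a plain function from prompt to string. `TransientError` is a hypothetical stand-in for whatever timeout or rate-limit exception your client raises.

```python
import random
import time
from typing import Callable


class TransientError(Exception):
    """Stand-in for a timeout or rate-limit error from a model API."""


def with_retry(
    call: Callable[[str], str],
    max_attempts: int = 3,
    base_delay: float = 0.5,
    sleep: Callable[[float], None] = time.sleep,
) -> Callable[[str], str]:
    """Retry transient failures with exponential backoff plus a little jitter."""
    if max_attempts < 1:
        raise ValueError("max_attempts must be at least 1")

    def wrapped(prompt: str) -> str:
        for attempt in range(1, max_attempts + 1):
            try:
                return call(prompt)
            except TransientError:
                if attempt == max_attempts:
                    raise
                sleep(base_delay * 2 ** (attempt - 1) + random.uniform(0, 0.1))
        raise AssertionError("unreachable")

    return wrapped


def with_cache(call: Callable[[str], str]) -> Callable[[str], str]:
    """Serve repeated prompts from memory instead of calling the model again."""
    cache: dict[str, str] = {}

    def wrapped(prompt: str) -> str:
        if prompt not in cache:
            cache[prompt] = call(prompt)
        return cache[prompt]

    return wrapped
```

Composing them as `with_cache(with_retry(model_call))` means a cache hit never touches the network, and only successful responses are stored. An exact-match, unbounded in-memory cache is the simplest possible choice; production systems typically add expiry and a shared store.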

7. Governance + Access Control Layer

This layer ensures AI systems are accountable and compliant.

- SSO + IAM for developers and users

- API usage metering and cost alerts

- Audit trails for prompts, completions, and actions

- Usage dashboards for executives

Governance is no longer a blocker—it’s your competitive edge.
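The audit-trail and cost-alert items above can be sketched in a few lines. This is a toy in-memory ledger with assumed field names; storing hashes instead of raw text is one possible design choice, not a requirement from any standard.

```python
import hashlib
import json
from dataclasses import dataclass, field
from datetime import datetime, timezone


@dataclass
class UsageLedger:
    """Tracks spend against a budget and keeps one audit entry per call."""

    budget_usd: float
    spent_usd: float = 0.0
    audit_log: list[str] = field(default_factory=list)

    def record(self, user: str, prompt: str, completion: str, cost_usd: float) -> bool:
        """Append an audit entry; return True if spend now exceeds the budget."""
        self.spent_usd += cost_usd
        entry = {
            "ts": datetime.now(timezone.utc).isoformat(),
            "user": user,
            # Hashes let auditors match records without the log holding raw text.
            "prompt_sha256": hashlib.sha256(prompt.encode()).hexdigest(),
            "completion_sha256": hashlib.sha256(completion.encode()).hexdigest(),
            "cost_usd": cost_usd,
        }
        self.audit_log.append(json.dumps(entry))
        return self.spent_usd > self.budget_usd


ledger = UsageLedger(budget_usd=1.0)
if ledger.record("alice", "Summarize Q3 results", "Revenue grew.", cost_usd=0.02):
    print("cost alert: budget exceeded")
```

In a real deployment the log would go to append-only storage and the alert to a paging or chat channel. The point is that metering and auditing happen in the same code path as the model call, so neither can be skipped.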

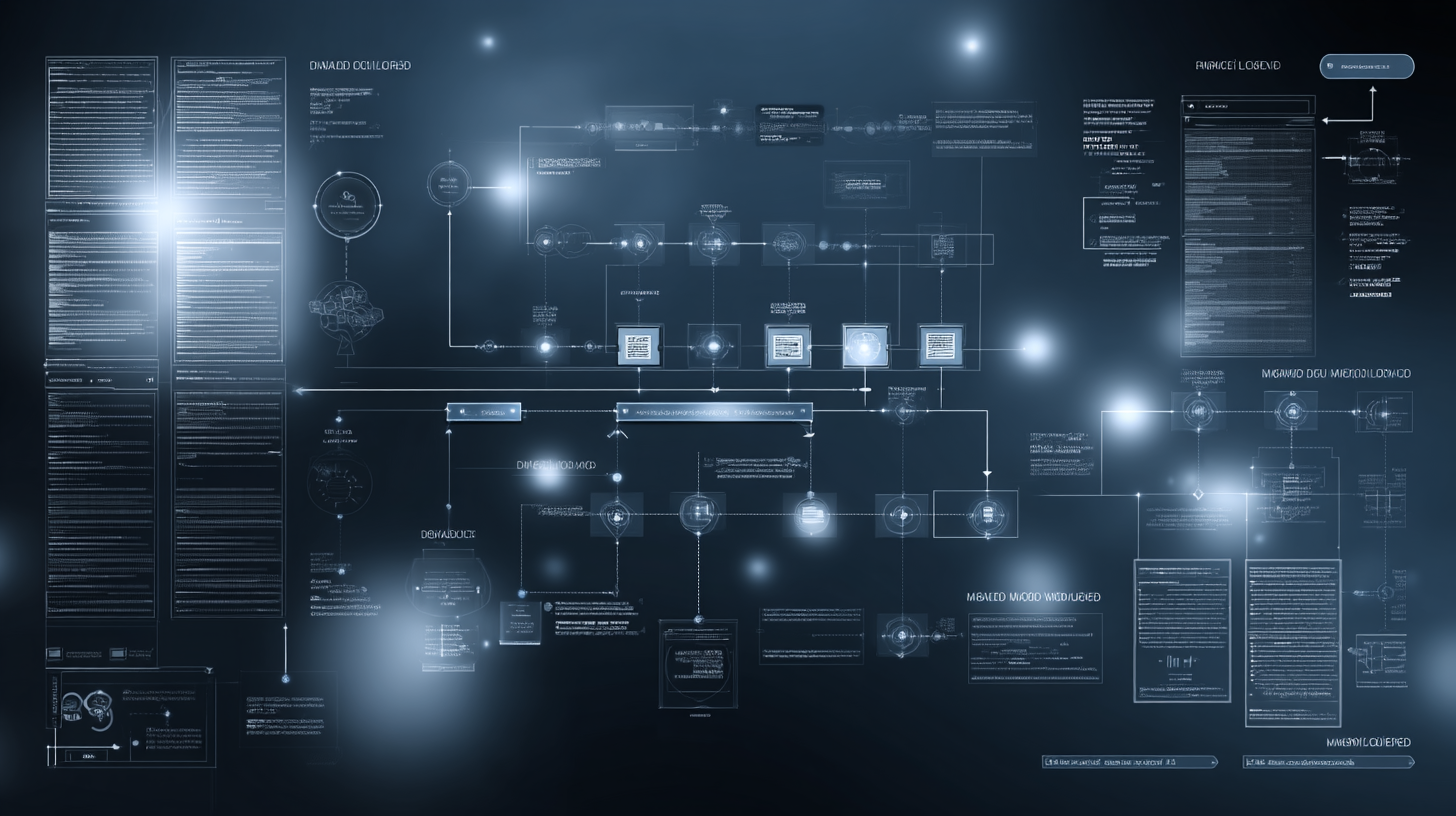

🧩 Reference Diagram: The AI Stack in 2025

(Insert diagram here: layered visualization of the stack with icons representing each layer)

🧠 Common Patterns in 2025 Enterprise AI

- Start with LLM + RAG before deploying full agents

- Use open models internally, hosted models externally

- Centralize embedding pipelines and vector stores

- Add prompt routing based on task complexity

- Observe before you scale — logs > vibes
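Prompt routing by task complexity, mentioned in the list above, can start as a crude heuristic. The keyword list, scoring rule, threshold, and tier names below are all assumptions for illustration; teams often replace this with a small classifier once they have logs to train on.

```python
# Hypothetical cues that a prompt needs multi-step reasoning.
REASONING_HINTS = ("why", "compare", "explain", "plan", "step by step")


def estimate_complexity(prompt: str) -> int:
    """Crude score: longer prompts and reasoning keywords count as harder."""
    lowered = prompt.lower()
    score = len(lowered.split()) // 50
    score += sum(1 for hint in REASONING_HINTS if hint in lowered)
    return score


def route(prompt: str, threshold: int = 2) -> str:
    """Return a hypothetical model tier name for the prompt."""
    return "large-model" if estimate_complexity(prompt) >= threshold else "small-model"


print(route("Translate 'hello' to French"))
print(route("Explain why plan A beats plan B and compare costs"))
```

Logging the score alongside each routing decision gives you the data to tune the threshold later, in line with the "observe before you scale" pattern.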

🔄 API vs. Internal Agents: Choosing the Right Deployment Strategy

| Strategy | Pros | Cons |
|---|---|---|
| API Assistants | Easy to deploy, good for UIs | Limited autonomy, often brittle |
| Internal Agents | Richer actions, can operate in background | Requires more guardrails and validation |
| Multi-Agent Systems | Collaborative, good for complex workflows | Still experimental, performance varies |

📊 Enterprise Teams Using This Stack Today

- A Fortune 500 logistics company uses CrewAI and Weaviate to automate freight document processing across 3 continents.

- A financial services provider combines Claude 3 with internal APIs to create compliant customer onboarding flows.

- A healthcare platform uses LangChain, Guardrails AI, and Mistral for secure document triage with human-in-the-loop approvals.

The stack is real. The impact is measurable. The challenge is orchestration, not capability.

✅ Final Thoughts

The AI stack in 2025 is no longer a science experiment. It’s a blueprint.

Smart organizations are focusing less on building from scratch and more on integrating strategically—pairing the best models, tools, and frameworks with thoughtful architecture, observability, and governance.

If you’re still waiting for the perfect moment to invest in enterprise AI infrastructure, you’ve already fallen behind.

💬 Want Help Designing Your AI Stack?

InitializeAI helps enterprise teams plan, prototype, and scale AI systems with clarity, safety, and speed. Whether you're experimenting with internal agents or rolling out AI across departments, we can help.